Scraping Amazon without proxies is a losing battle. You'll quickly hit IP bans, encounter endless CAPTCHAs, and get rate-limited before you collect anything useful. Amazon’s anti-bot systems are some of the toughest on the internet.

To stay under the radar, proxies are essential. In this article, we’ll show you how to scrape Amazon efficiently using Nstproxy’s Residential Proxies — a reliable way to extract product data without getting blocked.

Why You Need Proxies to Scrape Amazon

Amazon uses aggressive anti-scraping techniques:

- IP tracking & rate limiting

- Bot behavior detection

- Geo-blocking

- JavaScript challenges & CAPTCHAs

Without proxies, sending multiple requests from a single IP will quickly get you flagged.

Residential proxies like those from Nstproxy simulate real user traffic from actual devices and ISPs, making your scraper look legitimate.

Why Choose Nstproxy Residential Proxies?

Nstproxy provides:

- 90M+ IPs from 195+ countries

- SOCKS5 & HTTP/HTTPS support

- Session control for sticky or rotating IPs

- Optimized gateway routing (US, EU, APAC)

✅ Ideal for large-scale Amazon scraping, price monitoring, and competitor research.

Best Proxy Type for Amazon: Residential with Rotation

Proxy Types Comparison:

| Proxy Type | Pros | Cons / Notes |
|---|---|---|
| Datacenter | Fast | Easily blocked |
| Mobile | Extremely anonymous | Expensive |
| Residential | High anonymity, affordable, effective | Best balance for Amazon use |

🔹 For Amazon scraping, rotating residential proxies are most effective — they offer real IPs from real users, rotating periodically to avoid detection.

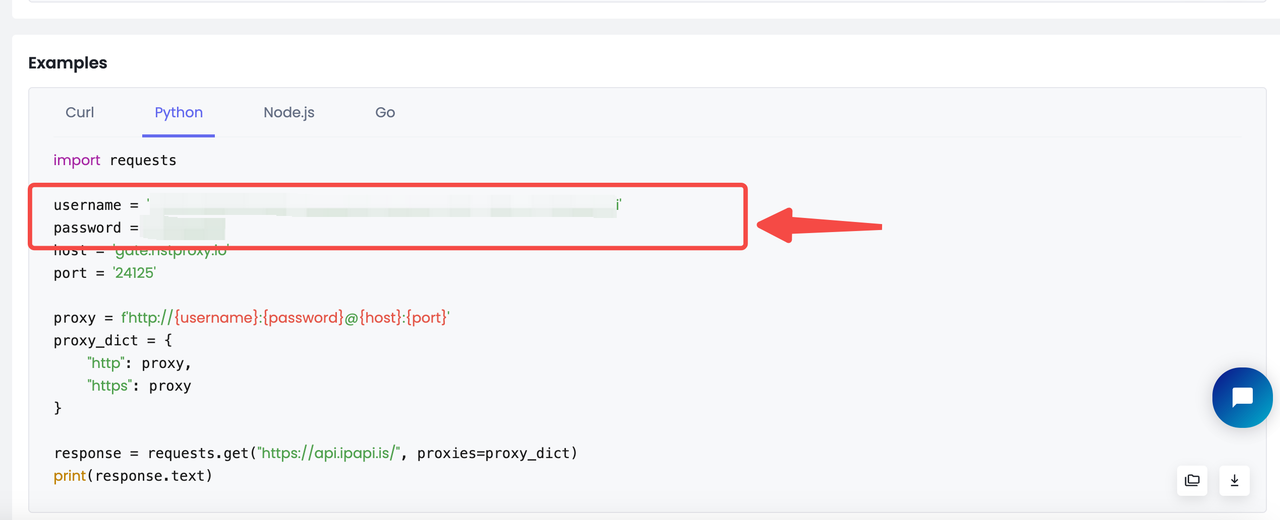

Setting Up Nstproxy with Python

Once you've purchased a proxy plan:

- Go to Proxy Setup in the dashboard

- Select Python from code language options

- Copy the ready-to-use snippet with your credentials

Here’s how to use Nstproxy residential proxies in a Python scraper with the requests library:

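    username = 'YourUsername'
    password = 'YourPassword'
    host = 'gate.nstproxy.io'
    port = '24125'

    # Build the proxy URL and route both HTTP and HTTPS traffic through it.
    proxy = f'http://{username}:{password}@{host}:{port}'
    proxies = {"http": proxy, "https": proxy}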
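If your password contains characters such as `@` or `:`, pasting it straight into the proxy URL can break URL parsing. The following is a minimal sketch of one way to guard against that, assuming the same gateway host and port shown above. `build_proxies` is a hypothetical helper name used for illustration; it is not part of the Nstproxy dashboard snippet or the requests API.

```python
from urllib.parse import quote


def build_proxies(username, password, host='gate.nstproxy.io', port=24125):
    """Return a requests-style proxies dict with URL-safe credentials."""
    # Percent-encode the credentials so characters like '@' or ':' are not
    # mistaken for URL delimiters.
    user = quote(username, safe='')
    pwd = quote(password, safe='')
    proxy = f'http://{user}:{pwd}@{host}:{port}'
    return {'http': proxy, 'https': proxy}


# Placeholder credentials for illustration only.
proxies = build_proxies('YourUsername', 'p@ss:word')
print(proxies['https'])
```

The resulting dict can be passed to `requests.get(url, proxies=proxies, timeout=30)`.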

Full Amazon Scraping Script Example

Here is the complete script for scraping Amazon search results, using https://www.amazon.com/s?k=apple as the starting URL:

    import random
    import time
    from urllib.parse import urljoin

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup

    username = 'YOUR Nstproxy Username'
    password = 'Your password'
    host = 'gate.nstproxy.io'
    port = '24125'

    proxy = f'http://{username}:{password}@{host}:{port}'
    proxies = {"http": proxy, "https": proxy}

    # Browser-like headers so requests resemble normal traffic.
    custom_headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36',
        'Accept-Language': 'da, en-gb, en',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.7',
        'Referer': 'https://www.google.com/'
    }


    def parse_listing(listing_url, visited_urls, current_page=1, max_pages=2):
        """Scrape every product on a search results page, then follow pagination."""
        resp = requests.get(
            listing_url, headers=custom_headers, proxies=proxies
        )
        print(resp.status_code)
        soup_search = BeautifulSoup(resp.text, 'lxml')
        link_elements = soup_search.select(
            '[data-cy="title-recipe"] > a.a-link-normal'
        )
        page_data = []

        for link in link_elements:
            full_url = urljoin(listing_url, link.attrs.get('href'))
            if full_url not in visited_urls:
                visited_urls.add(full_url)
                print(f'Scraping product from {full_url[:100]}', flush=True)
                product_info = get_product_info(full_url)
                if product_info:
                    page_data.append(product_info)
                # Pause between product requests to avoid a burst pattern.
                time.sleep(random.uniform(3, 7))

        next_page_el = soup_search.select_one('a.s-pagination-next')
        if next_page_el and current_page < max_pages:
            next_page_url = next_page_el.attrs.get('href')
            next_page_url = urljoin(listing_url, next_page_url)
            print(
                f'Scraping next page: {next_page_url} '
                f'(Page {current_page + 1} of {max_pages})',
                flush=True
            )
            page_data += parse_listing(
                next_page_url, visited_urls, current_page + 1, max_pages
            )

        return page_data


    def get_product_info(url):
        """Fetch one product page and extract its main fields."""
        resp = requests.get(url, headers=custom_headers, proxies=proxies)
        if resp.status_code != 200:
            print(f'Error in getting webpage: {url}')
            return None

        soup = BeautifulSoup(resp.text, 'lxml')

        title_element = soup.select_one('#productTitle')
        title = title_element.text.strip() if title_element else None

        price_e = soup.select_one('#corePrice_feature_div span.a-offscreen')
        price = price_e.text if price_e else None

        rating_element = soup.select_one('#acrPopover')
        rating_text = rating_element.attrs.get('title') if rating_element else None
        rating = (
            rating_text.replace('out of 5 stars', '').strip()
            if rating_text else None
        )

        image_element = soup.select_one('#landingImage')
        image = image_element.attrs.get('src') if image_element else None

        description_element = soup.select_one(
            '#productDescription, #feature-bullets > ul'
        )
        description = (
            description_element.text.strip() if description_element else None
        )

        return {
            'title': title,
            'price': price,
            'rating': rating,
            'image': image,
            'description': description,
            'url': url
        }


    def main():
        visited_urls = set()
        search_url = 'https://www.amazon.com/s?k=apple'
        data = parse_listing(search_url, visited_urls)
        df = pd.DataFrame(data)
        df.to_csv('apple.csv', index=False)


    if __name__ == '__main__':
        main()
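The script stores `price` and `rating` as raw strings, which makes sorting or filtering in the CSV awkward. As a rough sketch, the fields could be converted to numbers before building the DataFrame. `parse_price` and `parse_rating` are hypothetical helper names, and the price pattern assumes US-style formatting (comma thousands separator, dot decimal), which may not hold on other Amazon marketplaces.

```python
import re


def parse_price(price_text):
    """Convert a price string such as '$1,299.00' to a float, or None."""
    if not price_text:
        return None
    # Assumption: US-style number formatting.
    match = re.search(r'\d[\d,]*(?:\.\d+)?', price_text)
    if not match:
        return None
    return float(match.group().replace(',', ''))


def parse_rating(rating_text):
    """Extract the leading number from text like '4.5 out of 5 stars'."""
    if not rating_text:
        return None
    match = re.search(r'\d+(?:\.\d+)?', rating_text)
    return float(match.group()) if match else None
```

Both helpers return `None` for missing input, so they can be applied directly to the values returned by `get_product_info`.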

Tips for Scraping Amazon Successfully

- Rotate User-Agents: Avoid using the same one repeatedly

- Use Sessions Wisely: Set `sessionDuration` for stickiness (e.g., logins)

- Random Delays: `time.sleep(random.uniform(1, 3))`

- Error Handling: Retry on `503`/`429` with exponential backoff

- CAPTCHA Detection: Switch to a new session when triggered
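Several of these tips can be combined in one small wrapper. The sketch below rotates the User-Agent on each attempt, retries on 429/503 with exponential backoff plus jitter, and treats a CAPTCHA page as a blocked response. The names `fetch_with_retries` and `looks_like_captcha`, the User-Agent pool, and the CAPTCHA marker strings are all illustrative assumptions; the marker strings in particular are a heuristic, not a documented signal.

```python
import random
import time

# Illustrative User-Agent pool; replace with strings you have verified.
USER_AGENTS = [
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36',
]

RETRY_STATUSES = {429, 503}


def looks_like_captcha(html):
    """Heuristic check; the marker strings are assumptions."""
    lowered = html.lower()
    return 'captcha' in lowered or 'enter the characters you see' in lowered


def fetch_with_retries(send, url, max_attempts=4, base_delay=1.0,
                       sleep=time.sleep):
    """Call send(url, headers) until a usable response arrives.

    `send` is any callable returning an object with .status_code and .text,
    e.g. lambda u, h: requests.get(u, headers=h, proxies=proxies, timeout=30)
    Returns the response, or None if every attempt was blocked.
    """
    for attempt in range(max_attempts):
        # Pick a different User-Agent at random for each attempt.
        headers = {'User-Agent': random.choice(USER_AGENTS)}
        resp = send(url, headers)
        blocked = (resp.status_code in RETRY_STATUSES
                   or looks_like_captcha(resp.text))
        if not blocked:
            return resp
        if attempt < max_attempts - 1:
            # Exponential backoff with jitter: base * 2^attempt + up to 1s.
            sleep(base_delay * 2 ** attempt + random.uniform(0, 1))
    return None
```

Passing the request function in as `send` keeps the retry logic independent of how the proxy or session is configured, so a rotating gateway can hand out a fresh IP on each retry.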

Challenges and Solutions

| Challenge | Solution |
|---|---|
| IP blocks | Use Nstproxy rotating residential proxies |
| Rate limiting | Add delays and rotate IPs & headers |
| Structure changes | Inspect DOM manually, adjust selectors accordingly |
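One way to soften the impact of structure changes is to try several selectors in order rather than relying on a single one. The sketch below assumes a BeautifulSoup object (anything exposing `select_one` works). `select_first_text` is a hypothetical helper, and the second selector in `PRICE_SELECTORS` is an illustrative fallback that you would need to confirm against the live page.

```python
def select_first_text(soup, selectors):
    """Return stripped text of the first selector that matches, else None."""
    for selector in selectors:
        element = soup.select_one(selector)
        if element is not None:
            text = element.get_text(strip=True)
            if text:
                return text
    return None


# Ordered from most to least specific. The first entry is the selector used
# in the script above; the second is an assumed fallback for illustration.
PRICE_SELECTORS = [
    '#corePrice_feature_div span.a-offscreen',
    'span.a-price span.a-offscreen',
]
```

In the script above, `price = select_first_text(soup, PRICE_SELECTORS)` would take the place of the single `select_one` lookup, and adjusting to a layout change becomes a matter of editing the list.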

Final Thoughts

Scraping Amazon is hard — but not impossible. With Nstproxy’s residential proxies, rotating IPs, and realistic browsing behavior, you can collect high-value product data at scale without getting blocked.

🚀 Start your Nstproxy trial today: 5GB free.

Need help with integration? We’re here to assist.